Simrann M Bhambani, a marketing professional at Flipkart, recently published a candid LinkedIn post titled “ChatGPT is TOXIC! (for me)”, revealing how her early curiosity about the AI tool for brainstorming and productivity developed into a troubling emotional reliance.

She explained that the chatbot initially served as a helpful digital companion but soon became a space where she shared “every inconvenience, every spiral, every passing emotion,” despite having a strong support network of friends.

According to her post, ChatGPT’s 24/7 availability, non-judgmental responses, and apparent understanding offered a comfort that human interaction did not always provide. It felt like a therapeutic outlet, but that safety net gradually turned into dependency and overload.

Bhambani noted, “It stopped being clarity and became noise,” as she poured energy into feeding each anxious thought into the AI, deepening her overthinking instead of easing it.

Recognising the psychological toll, Bhambani decided to uninstall ChatGPT from her devices. She reflected: “Technology isn’t the problem. It’s how quietly it replaces real reflection that makes it dangerous.”

Her post has since gone viral, resonating with thousands of users who are grappling with emotional over-dependence on AI.

The post has inspired widespread commentary online. Some users commended her honesty and courage, calling her decision both “brave and necessary.” Others warned of the hidden dangers of relying on AI for emotional support, even unconsciously.

Several commenters cautioned against turning to chatbots for emotional support rather than for technical tasks.

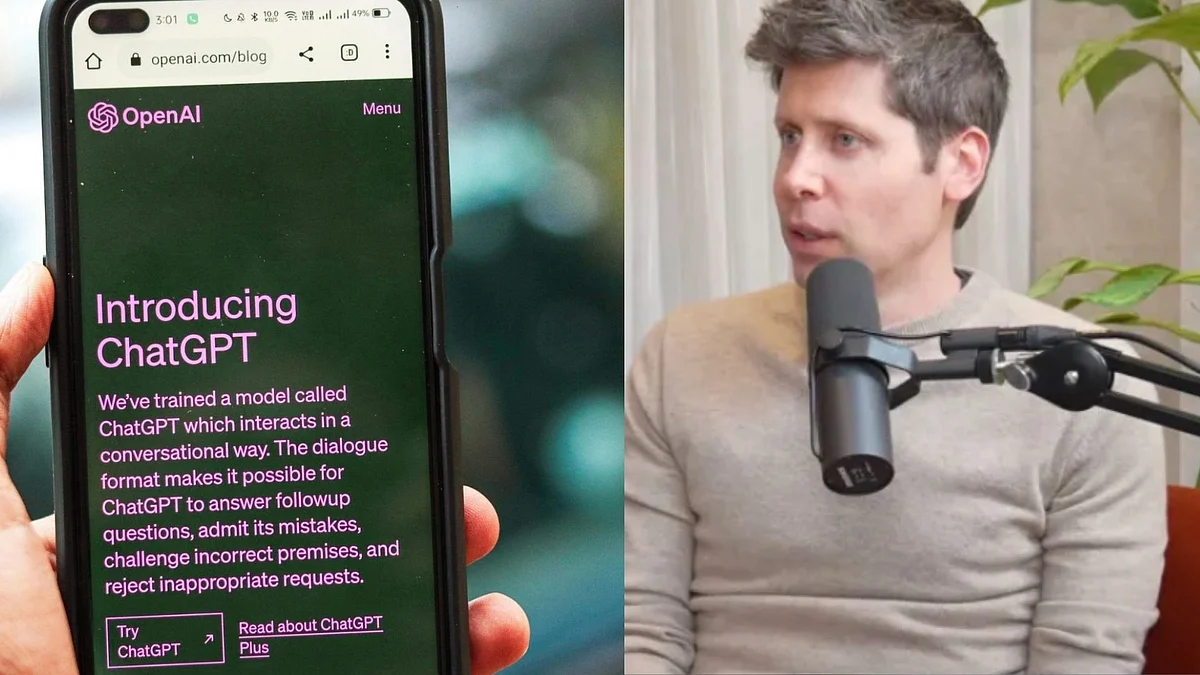

Recently, OpenAI CEO Sam Altman also warned users that ChatGPT chats are not private or legally protected the way therapy sessions are. He noted that even deleted chats may still be retrieved for legal and security reasons.

"Right now, if you talk to a therapist or a lawyer or a doctor about those problems, there's legal privilege for it. There's doctor-patient confidentiality, there's legal confidentiality, whatever. And we haven't figured that out yet for when you talk to ChatGPT," Altman had said.