Experts in the field of artificial intelligence all agree on two things. The first is that AI, as a concept, is amazing and wonderful. It listens, it assimilates, it learns and it evolves. The second is that for all the above reasons, it is also terrifying.

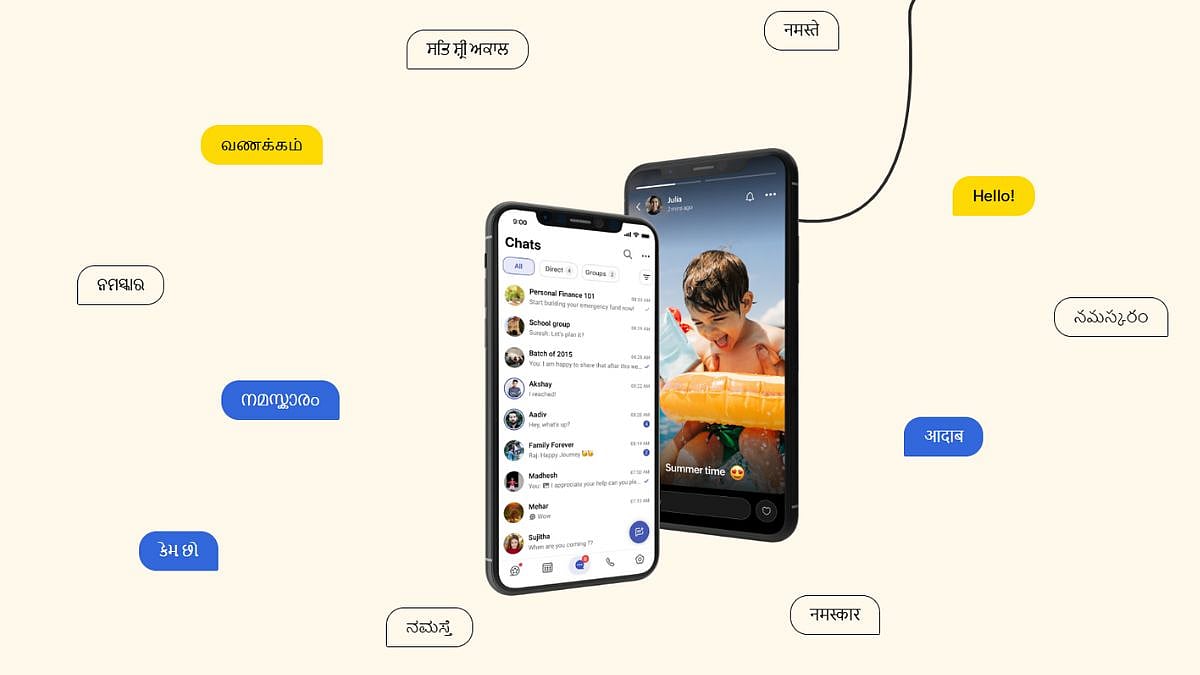

As with every technology invented to make life easier, the impact of AI depends on the mindset of the user. WhatsApp, a fantastic messaging service, is today one of the biggest channels for cybercrimes like sextortion. QR codes, which have simplified digital payments, are the backbone of the OLX cyber-scam. Similarly, AI-powered deepfakes are fast becoming one of the cornerstones of the online pornography industry.

Deepfakes Came To Light In Recent Years

The concept of deepfakes first burst into cyberspace around seven years ago, when complaints started coming in about morphed images of women being used for blackmail, or simply uploaded to porn websites as revenge porn or for perverse pleasure. Cyber law enforcement officials noted that these weren’t the usual clumsy Photoshop jobs.

The morphing was skillful and looked much more like the real thing. Soon, the authorities found a website named Deepnudes, which was generating explicit images of women for a price.

Today, deepfake technology can generate very realistic videos from short video samples or just seven or eight images. The technology studies the appearance and body language of the subject and makes an informed – or intelligent – guess as to what the person would look like in certain situations, such as when engaged in sexual acts.

“It is about the psychology,” says a senior cybersecurity researcher who works with the Indian government. “Teachers, parents or bosses have always been the top fantasies of porn consumers around the world, after celebrities. Now, you have a technology that lets you generate such videos of the people you wish to see being degraded, from your favourite actress to your toxic boss. It gives you a feeling of power, to take their pictures and generate explicit videos tailored to suit your dark fantasies. You can take your favourite porn video from the internet, upload images or sample videos of the person you wish to target and wait for the technology to do the rest.”

Advancements In Deepfakes

What makes deepfakes scarier, the researcher says, is that they don’t just paste one face over another. They also match the skin tone and produce convincing expressions to suit the scenario, using the subject’s laugh lines and wrinkles to work out how the face moves.

The best example of how convincing deepfakes are came earlier this year, in the form of a picture of wrestlers Sangeeta and Vinesh Phogat, both of whom, along with other female wrestlers, were arrested for protesting against MP Brijbhushan Singh.

The duo had posted a selfie from inside the police vehicle, and within the hour, an AI-manipulated picture that showed them smiling started doing the rounds on Twitter. What was concerning was how eerily accurate the app used for the manipulation, an AI-powered app called FaceApp, turned out to be. The perpetrators were able to place realistic smiles on the faces of Sangeeta and Vinesh, as well as a man seated two rows behind them, while leaving the cops’ faces untouched. And the smiles themselves were flawless, with even the eyes crinkling realistically.

And it gets worse. AI can also clone voices. This is not a hypothesis; it is a reality. In April this year, McAfee, a leading cybersecurity firm, was able to clone the voice of one of its researchers with near-perfect accuracy, using a tool available online. The tool’s free version produced a clone that was 85 per cent accurate, while the paid version produced a cloned voice almost indistinguishable from the real thing, complete with inflection and emotion.

And voices are easier to get than you might think. The same research also included a survey which found that 53 per cent of all adults share their voice online at least once a week on platforms like social media, with 49 per cent doing so up to 10 times in the same period. The practice is most common in India, where 86 per cent of people make their voices available online at least once a week, followed by the UK at 56 per cent and the US at 52 per cent. In a related survey, McAfee also found that 47 per cent of the Indians it surveyed had either fallen prey to AI-enabled voice scams or knew someone who had. Of these, 86 per cent had lost money to such scams, with 48 per cent losing over Rs 50,000.

A Possible Solution To The Threat?

So what, then, is the solution? Comprehensive legislation coupled with fierce enforcement. “It will take tremendous political willpower, as FIRs will need to be aggressively registered, arrests made and websites banned, none of which will happen without government backing. It will not happen overnight but unless there is fear of law, those with perverted mindsets will keep finding newer ways to abuse AI for the filthiest of purposes,” says a senior officer with the Mumbai Police.

And political will is where the rub lies. Case in point: there was no outrage from the government, or from any government-affiliated agency or department, when the Phogat sisters – literal survivors of sustained sexual assault – were targeted by AI. The outrage began only when actresses Rashmika Mandanna and Kajol became targets. And Prime Minister Narendra Modi spoke out against it only after, in his own words, he recently saw a deepfake of himself doing garba. Who, then, will bell this cat, one that needed to be belled yesterday?