Update (September 18, 5.23pm IST): Google has issued a statement to Free Press Journal regarding this anomaly. "The Nano Banana image model that was recently launched was not trained with user data from Google Photos, Google Workspace, or Google Cloud services. Any non-visible attribute that shows up in a generated image that wasn’t visible in an input image in the same Gemini conversation is a coincidence," a Google spokesperson said.

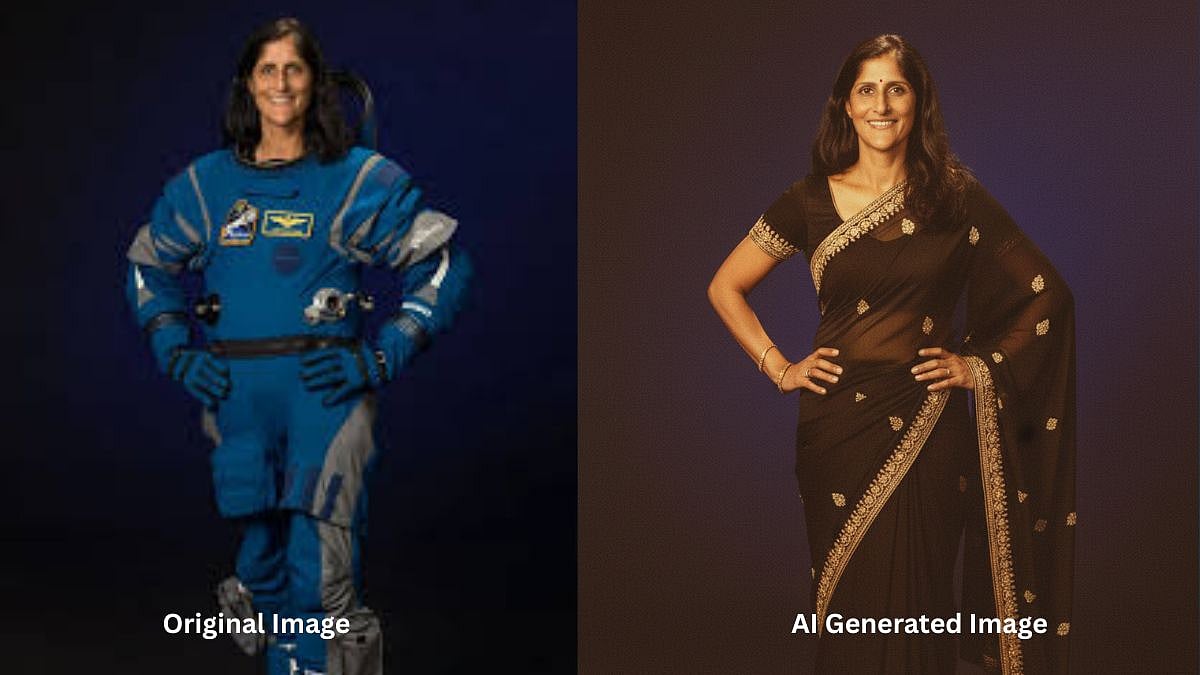

Google Gemini's Nano Banana AI saree trend, which has gone viral on Instagram, uses the tool to turn ordinary selfies into nostalgic 90s Bollywood-style portraits with flowing chiffon sarees, golden-hour lighting, and cinematic backdrops. However, an unsettling experience shared by Instagram user Jhalakbhawani has sparked concerns about the safety and privacy of AI-generated images.

The trend involves users uploading a photo to Google’s Gemini Nano Banana tool, paired with a prompt to create retro-inspired edits, such as polka-dot or black party-wear sarees with dramatic shadows and grainy textures reminiscent of classic Indian cinema. The process is simple: log into the Gemini app, select the “Try Image Editing” mode, upload a clear solo photo, and input a viral prompt to generate a Bollywood-style portrait within seconds.

Jhalakbhawani described the experience in an Instagram post after trying the trend. She had uploaded a photo of herself in a green full-sleeve suit and used a prompt to generate a saree edit. The resulting image was striking, but she noticed an alarming detail. “There is a mole on my left hand in the generated image, which I actually have in real life. The original image I uploaded did not have a mole,” she wrote. She questioned how the AI tool could know about a personal detail that was not visible in the uploaded photo, calling the experience “scary and creepy” and urging followers to “stay safe” when using AI platforms.

The incident, which gained significant attention online, has fueled discussions about AI safety. While Google incorporates safeguards like SynthID, an invisible digital watermark, and metadata tags to identify AI-generated images, experts warn these measures have limitations. According to aistudio.google.com, SynthID helps verify an image’s AI origin, but the detection tool is not yet publicly available, limiting its effectiveness for everyday users.

Experts recommend caution when participating in such trends. Users should avoid uploading sensitive images, strip metadata like location tags before sharing, and retain original photos to detect unauthorised changes.
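For readers curious what "stripping metadata" involves, here is a minimal sketch, assuming a well-formed JPEG file. In JPEG, Exif data (including GPS location tags) and XMP are stored in APP1 segments that sit before the compressed image data, so removing those segments drops that metadata without touching the pixels. The function name `strip_app1` is hypothetical and this is not a complete tool: it ignores other formats such as HEIC or PNG and leaves other metadata segments (for example IPTC or comments) in place. Dedicated tools or a phone's own "remove location" sharing option are the more practical route for most users.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker that every JPEG begins with
APP1 = 0xE1        # APP1 segment: holds Exif (including GPS tags) or XMP
SOS = 0xDA         # start of scan: compressed pixel data follows
EOI = 0xD9         # end-of-image marker


def strip_app1(jpeg: bytes) -> bytes:
    """Return a copy of `jpeg` with all APP1 (Exif/XMP) segments removed.

    Hypothetical helper; assumes a well-formed baseline JPEG. Other
    metadata segments (e.g. APP13/IPTC, COM comments) are kept as-is.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = 2
    while pos < len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker == SOS:
            # Image data (and the final EOI) follow; copy them unchanged.
            out += jpeg[pos:]
            break
        if marker == EOI:
            out += jpeg[pos:pos + 2]
            break
        # Segment length is big-endian and counts its own 2 length bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg[pos:end]  # keep every non-APP1 segment
        pos = end
    return bytes(out)
```

To use it, read a photo in binary mode (for example `open("photo.jpg", "rb").read()`, where the filename is a placeholder), pass the bytes to `strip_app1`, and write the result to a new file, keeping the original as the instructions above suggest.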